Cyber Criminals Are Using A.I. to Supercharge Their Scams

Last updated July 1, 2025

Criminals are weaponizing artificial intelligence (AI) to make their scams more convincing. It’s increasingly difficult to distinguish between what’s real and what’s fake.

The FBI’s Internet Crime Complaint Center received 859,500 complaints about suspected cybercrimes in 2024, resulting in losses that exceeded $16 billion, a 33 percent increase from 2023. Much of that fraud was enabled by AI.

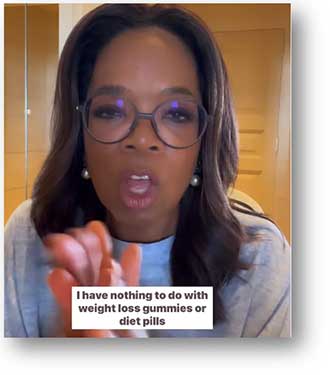

AI has been used to create deepfake celebrity endorsements that appear to be from Elon Musk (cryptocurrency), Tom Hanks (dental insurance), Taylor Swift (skincare and beauty products), and Keanu Reeves (CBD oil). So many people were exposed to a bogus ad featuring Oprah Winfrey’s likeness that she had to post a video on Instagram warning that it wasn’t her.

Digital security consultant Brett Johnson, a convicted cybercriminal who was once on the Secret Service’s 10 Most Wanted List, said AI allows fraudsters to “fine-tune their attacks and scale up the volume,” making cybercrime “much easier to commit and much more profitable.”

Fraudsters with limited cyber skills can use black-market chatbots, such as “FraudGPT” and “WormGPT,” to do all the heavy lifting, he said, creating phishing emails without typos or awkward wording and with fake images or videos that look strikingly real.

A new report from the Consumer Federation of America (CFA) warns AI can create images and videos for social media that “make fraudulent messages appear to be authentic and trustworthy.” One example: using AI-generated images as clickbait for charity scams.

After natural disasters, fraudsters have been disseminating AI-generated images to elicit an emotional response and a generous donation to a fictional charity—themselves. The AI-generated image below, designed to resemble a screenshot of a local newscast in Huntsville, Ala., went viral last year, prompting the real WAFF-TV to issue a scam alert.

Experts Warn AI Lets Cyberthieves Perfect Their Scams

For a recent episode of Checkbook’s Consumerpedia podcast, I was joined by two cybersecurity experts: Ben Winters, director of AI and privacy at the Consumer Federation of America (who wrote the CFA report), and Kolina Koltai, a senior researcher at Bellingcat, an investigative journalism group based in the Netherlands.

Here are excerpts of our conversation, lightly edited for clarity and brevity:

Checkbook: AI has shown significant improvement over the last year or so. How important is this technology for bad actors?

Kolina: I think it’s an incredibly powerful tool. If I wanted to switch careers to become a fraudster or a scammer, I would 100 percent be using various AI tools in my repertoire. It’s made everything so much easier for criminals; they’re able to scam people in a variety of ways. And it’s made our ability to catch them a lot harder.

Ben: What it mainly does is amplify the bad behaviors that we see from scammers. It makes it easier to do, harder to detect, and a little bit more personalized.

Kolina: And the amount of damage a singular person can do has exponentially increased with the availability of AI technology.

Checkbook: Sometimes, computer-generated images are easy to spot: a hand with six fingers, tables or chairs floating in the air without legs, or skin that doesn’t look natural. But not always. Artificial intelligence has advanced so rapidly that it’s now possible for anyone, especially scammers, to create deepfake videos that can pass as genuine and share them on TikTok, Facebook, or other social media platforms.

Kolina: We’re encountering a lot more inauthentic content in a lot of different ways, in ways that people aren’t necessarily prepared for.

Ben: Yeah, it works to get your attention. And it’s hard to know whether it’s legitimate or not. In an ideal world, consumers should not have to be constantly vigilant about whether something is real or not, but you must be nowadays.

Checkbook: And now deepfake videos can take place in real time, something that wasn’t possible a year ago, which will make romance scams more profitable for the bad guys. They can video chat with their target, and the AI will display whatever image they want (a beautiful woman, a handsome man) with the corresponding voice.

Ben: With romance scams, fraudsters are already using AI for texting on dating or messaging apps. In the past, you could assume that if you got them on the phone or saw them on a video chat, you could verify that they’re a real person. That’s now thrown into question because of the increasing availability of real-time video and audio masking.

Checkbook: Kolina, you told me that your 82-year-old father has tried online dating, and he’s fallen for romance scams that involved AI.

Kolina: Yes. He’ll ask the women to send him a photo of their ID, such as their driver’s license, to prove that they’re real. And when I look at them, they’re photoshopped. But sometimes, he’ll get a video and say to me, “I talked to them on video, I talked to them.” And as much as I would like to try to prove that some of these women are not real, it becomes difficult to convince someone, particularly when they’re emotionally involved. How do you convince someone who’s seeing what they see? I think it is dangerous because it’s not limited to seniors. Romance scams happen to people of all ages.

Guardrails Needed

AI is like a racetrack with no bumpers and no speed limit. The technology has amazing potential for diagnosing diseases and personalizing learning, but it’s also empowering bad actors to do more harm.

The Consumer Federation of America wants Congress to create guardrails that would limit the criminal use of AI. This would include new privacy and data transparency protections, as well as amending current law to hold AI companies accountable for the content generated by their algorithms.

“AI companies should be liable for the outputs of their systems, especially when those outputs can lead to real-world harm,” said Susan Weinstock, CFA’s president and CEO. “It’s the responsibility of AI platforms and programs to ensure their systems do not contribute to societal issues.”

How to Protect Yourself

The rules for protecting yourself against all fraudsters remain the same, whether they’re using AI or not. Slow down, don’t believe everything you see or hear, and assume everything can be faked.

“Be skeptical and think before you click, before you text, definitely before you send money,” said Lorrie Cranor, director of the CyLab Usable Privacy and Security Laboratory at Carnegie Mellon University. “Is this plausible in this situation? Don’t rush to give away personal information, passwords, money, bank account numbers, anything like that.”

If you’re not sure, Cranor suggests getting a second opinion. Ask a friend or relative to review the ad or message before you respond. If your gut is telling you this doesn’t seem right, move on.

Additional Resources:

- Scamplified: How Unregulated AI Continues to Help Facilitate the Rise in Scams

- Don’t Get Scammed! Tips For Spotting AI-Generated Fake Products Online

More from Checkbook:

- Identity Theft and Fraud: How to Protect Yourself

- Fraud Alert: Criminals Pitch Bogus Cryptocurrency Investments

- Social Media Scams Are Skyrocketing. Here’s How to Protect Yourself

Contributing editor Herb Weisbaum (“The ConsumerMan”) is an Emmy award-winning broadcaster and one of America's top consumer experts. He has been protecting consumers for more than 40 years, having covered the consumer beat for CBS News, The Today Show, and NBCNews.com. You can also find him on Facebook, Bluesky, X, Instagram, and at ConsumerMan.com.